Dominic Cronin's weblog

New Tridion cookbook article: Recursive walk of Tridion tree

I'm still trying to get the important parts of my Tridion developer summit talk online. With a code-based demo like that, sharing the slides is pretty pointless, so I'm putting the code on-line wherever it makes sense. So far this has been in the Tridion cookbook. Here's the latest:

https://github.com/TridionPractice/tridion-practice/wiki/Recursive-walk-of-Tridion-tree

The thing that really triggered me to get this on-line was that someone had recently asked me if it was possible to query Tridion to find items that were local to a publication rather than shared from higher in the BluePrint. With the tree walk in place, this becomes almost trivial. (I'm not saying that there aren't better ways to get the list of items to process, but the tree walk certainly works.)

So having got the items into a variable following the technique in the recipe, finding the shared items becomes as simple as:

$items | ? {$_.BluePrintInfo.IsShared}

But it might be more productive to throw all the items into a spreadsheet along with the relevant parts of their BluePrint Info:

$items | select Title, Id, @{n="IsShared";e={$_.BluePrintInfo.IsShared}}, `
    @{n="IsLocalized";e={$_.BluePrintInfo.IsLocalized}} `
    | Export-csv blueprintInfo.csv
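The original question was about the items that are local rather than shared, which is just the same filter turned around. Here's a minimal sketch of that. The $items array is made up of stand-in objects so that the snippet runs without a Tridion system (the titles and IDs are invented), and I'm assuming that IsShared is false for anything created in, or localized into, the publication.

```powershell
# Stand-in data shaped like the items collected by the tree walk.
# Titles and IDs are invented for illustration only.
$items = @(
    [pscustomobject]@{
        Title         = 'Shared schema'
        Id            = 'tcm:5-10-8'
        BluePrintInfo = [pscustomobject]@{ IsShared = $true; IsLocalized = $false }
    }
    [pscustomobject]@{
        Title         = 'Localized page'
        Id            = 'tcm:5-20-64'
        BluePrintInfo = [pscustomobject]@{ IsShared = $false; IsLocalized = $true }
    }
    [pscustomobject]@{
        Title         = 'Local component'
        Id            = 'tcm:5-30'
        BluePrintInfo = [pscustomobject]@{ IsShared = $false; IsLocalized = $false }
    }
)

# Keep everything that is NOT shared from higher in the BluePrint.
$localItems = @($items | Where-Object { -not $_.BluePrintInfo.IsShared })

$localItems | Select-Object Title, Id
```

With real data you'd skip the first part and just run the Where-Object line against whatever the recipe left in $items.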

Am I the only one that finds this fun? It's fun, right! :-)

New Tridion Cookbook article: Set up publication targets

In my "Talking to Tridion" session at the Tridion Developer Summit this year, one of the things I demonstrated was a script to automatically set up publication targets in Tridion. I'm now finally getting round to putting the talk materials on-line, and this one seemed a good candidate to become a recipe in the Tridion Cookbook. So if you are feeling curious, get yourself over to Tridion Practice and have a look. The new recipe is to be found here.

Managing the Tridion Core service powershell module as a git submodule

N.B. Peter has changed the structure of the module (as he has every right to do, and I'm not complaining) - what this means is that this blog post is pretty useless other than as an exercise in poking at things. Maybe I'll figure it out, but in the meantime, assume that this technique won't work.

I spend quite some time fiddling with various powershell implementations on my Tridion image. Whenever there's a place where I do experimental things like this, I run the risk that I'm going to break something so, at the very least, I usually do a quick "git init" in the directory, add the files and commit them. Then I have the benefit of version diffs and rollbacks if I need them. The next step comes when I realise that it's something I'm going to work on over a longer time, and that I really would prefer not to lose. At this point, I usually go on to my linux server and init a bare git repository, and then push from wherever I'm working.

Today I reached this second phase with the WindowsPowerShell directory of the Administrator account on my Tridion image. (It's about time, because I'm busy preparing a talk for the Tridion Developer summit in a couple of weeks, and well, losing my scripts would put a kink in my plans, to say the least.)

In any case, I'd realised that I was running quite an old version of Peter Kjaer's Tridion-CoreService module. This module is the basis of pretty much any effort to use the Tridion core service from the powershell, and as this is the subject of my upcoming talk, I figured I should at least be doing my demos on the current version.

If you go to the github page for the module, you'll see that Peter's provided installation scripts which will help you to get up and running, but of course, if you have git installed, it makes just as much sense to clone the module directly. The only problem I had was that Modules are normally located in the Modules directory under the WindowsPowerShell directory. (You can add other locations to env:PSModulePath, but for what I wanted, that wasn't ideal.)

Fortunately, Git is widely used for projects that make use of other projects, and there is very good support built in, by way of git-submodule. As my main git repository for the powershell stuff is directly in the WindowsPowerShell directory, all I needed to do was add Peter's module as a submodule with the right path.

In fact I just clicked on the menu option in Tortoise Git, but the basic command looks something like this:

git submodule add --name Modules/Tridion-CoreService git@github.com:pkjaer/tridion-powershell-modules.git Modules\Tridion-CoreService

With this in place, git understands that the Tridion-CoreService code belongs to Peter's module, and if he releases a new version, I can just pull. And of course, my own changes go in my own repository. Adding a submodule adds a .gitmodules file in your repository, so if I ever clone my WindowsPowerShell repository into another server, the location of Peter's repository can be retrieved, and the files pulled from there.
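For what it's worth, the day-to-day commands on the receiving end look something like the sketch below. It assumes you're sitting in the root of a clone of the WindowsPowerShell repository, and that the submodule path is Modules/Tridion-CoreService as in the command above. Nothing here is specific to Peter's module; it's plain git-submodule usage.

```powershell
# Run from the root of the cloned WindowsPowerShell repository.

# After a plain clone the submodule directory is empty. This reads .gitmodules
# and checks out the commit that the parent repository has recorded.
git submodule update --init --recursive

# Show which commit each submodule is currently sitting on.
git submodule status

# Fetch the latest commit from the submodule's upstream branch...
git submodule update --remote Modules/Tridion-CoreService

# ...and, if it moved, record the new commit in the parent repository.
git add Modules/Tridion-CoreService
git commit -m "Update Tridion-CoreService submodule"
```

The parent repository only ever stores a pointer to a specific commit of the submodule, which is why the last two lines are needed after pulling in a newer version.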

One word of warning. This is not the official release process for the Tridion-CoreService module. That is described here. As the module is pretty much a one-man affair, it's not unreasonable that there's only the master branch, so pulling from it is at your own risk. Personally I'm happy with the small risk, as it helps me to keep my development system a bit tidier - and heck - if it breaks, we'll fix it!


Getting the complete component XML

One of the basic operations that a Tridion developer needs to be able to do is getting the full XML of a Component. Sometimes you only need the content, but say, for example, that you're writing an XSLT that transforms the full Component document - you need to be able to get an accurate representation of the underlying storage format. (OK - for now let's just skate over the fact that different versions have different XML formats under the water.)

In the balmy days of early R5 versions, this was pretty easy to do. The Tridion installation included a "protocol handler", which meant that if you just pasted a TCM URI into the address bar of your browser, you'd get the XML of that item displayed in the browser. This functionality was actually present so that you could reference Tridion items via the document() function in an XSLT, but this was a pretty useful side effect. OK... you had to be on the server itself, but hey - that's not usually so hard for a developer. If you couldn't get on the server, or you found it less convenient, another option was to configure the GUI to be in debug mode, and you'd get an extra button that opened up some "secret" dialogs that gave you access to, among other things, the XML of the item you had open in the GUI.

Moving on a little to the present day, things are a bit different. Tridion versions since 2011 have a completely different GUI, and XSLT transforms are usually done via the .NET framework, which has other ways of supporting access to "arbitrary" URIs in your XSLT. The GUI itself is built on a framework of supported APIs, but doesn't have a secret "debug" setting. However, this isn't a problem, because all modern browsers come fully loaded with pretty powerful debugging tools.

So how do we go about getting the XML if we're running an up-to-date version of Tridion? This question cropped up just a couple of days ago on my current project, where there's an upgrade from Tridion 2009 to 2013 going on. I didn't really have a simple answer - so here's how the complicated answer goes:

My first option when "talking to Tridion" is usually the core service. The TOM.NET API will give you the XML of an item directly via the .ToXml() methods. Unfortunately, someone chose not to surface this in the core service API. Don't ask me why. Anyway - for this kind of development work, you could use the TOM.NET. You're not really supposed to use the TOM.NET for code that isn't hosted by Tridion (such as templates) but on your development server, what the eye doesn't see the heart won't grieve over. Of course, in production code, you should take SDL's advice on such things rather more seriously. But we're not reduced to that just yet.

Firstly, a brief stop along the way to explain how we solved the problem in the short term. Simply enough - we just fired up a powershell and used it to access the good-old-fashioned TOM.COM. Like this:

PS C:\> $tdse = new-object -com TDS.TDSE

PS C:\> $tdse.GetObject("tcm:2115-5977",1).GetXml(1919)

Simple enough, and it gets the job done... but did I mention? We have the legacy pack installed, and I don't suppose this would work unless you have.

So can it be done at all with the core service? Actually, it can, but you have to piece the various parts together yourself. I did this once, a long time ago, and if you're interested, you can check out my ComponentFactory class over on a long lost branch of the Tridion power tools project. But that's probably too much fuss for day to day work. Maybe there are interesting possibilities for a powershell module to make it easier, but again.... not today.

But thinking about these things triggered me to remember the Power tools project. One of the power tools integrates an extra tab into your item popup, giving you the raw XML view. I'd been thinking to myself that the GUI API (Anguilla) probably had reasonably easy support for what we're trying to do, but I didn't want to go to the effort of figuring it all out. Never fear: after a quick poke around in the sources I found a file called ItemXmlTab.ascx.js, and there I found the following gem:

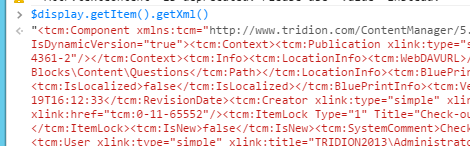

var xmlSource = $display.getItem().getXml();

That's all you need. The thing is... the power tool is great. It does what it says on the box, and as far as I'm concerned, it's an exceedingly good idea to install it on your development server. But still, there are reasons why you might not. Your server might be managed by someone else, and they might not be so keen, or you might be doing some GUI extension development yourself and want to keep a clear field of view without other people's extensions cluttering up the system. Whatever - sometimes it won't be there, and you'd still like to be able to just suck that goodness out of Tridion.

Fortunately - it's not a problem. Remember when I said most modern browsers have good development tools? We use them all the time, right? F12 in pretty much any browser will get you there - then you need to be able to find the console. Some browsers will take you straight there with Ctrl+Shift+J. So you just open the relevant Tridion item, go to the console and grab the XML. Here's a screenshot from my dev image.

So now you can get the XML of an item on pretty much any modern Tridion system without installing a thing. Cool, eh? Now some of you at the back are throwing things and muttering something about shouldn't it be a bookmarklet? Yes it should. That's somewhere on my list, unless you beat me to it.

On-line resources for the Agile Tridion Development talk

Links to on-line resources for the Agile Tridion Development talk I gave at the Tridion Developer summit 2014, Amsterdam.

How to set Rights and Permissions using the SDL Tridion core service API

I've just published a new post on the Indivirtual blog that explains How to set Rights and Permissions using the SDL Tridion core service API

Another blog post at Indivirtual - SDL Tridion's ImportExport API

I've just published another blog post at Indivirtual. This time it's about the ImportExport API. As you might guess - I had to have a go using the powershell. Here's the link:

http://www.indivirtual.nl/blog/sdl-tridions-importexport-api-end-content-porter/

Getting IIS Express to run in a 64 bit process, and other fun Tridion content delivery configurations

In the last couple of days, I've spent far more time than I'd like figuring out how to get a Tridion-based web application to run correctly under Visual Studio. There are three basic choices:

- Run it directly using Visual Studio

- Run it using IIS Express

- Run it using IIS (non-Express version)

As the application is intended to run on a 64 bit architecture, there are some challenges. Visual Studio runs in 32 bit mode, so the first option is out. Using full-on IIS is an attractive thought; you can manually configure the application pool to run in 64 bit mode. Unfortunately, getting a debug session up and running takes more configuration than that. You have to set up the web site correctly, and it was just too fiddly. I ran out of time, or steam or whatever. (Somebody will probably tell me it's easy, and I dare say it is when you know how, and aren't spending time you really should be spending on something else. Any hints are always welcome.)

Of course, with a Tridion site, half the game is making sure you have the correct DLLs in place for the processor architecture you are using. Along the way, I discovered that the quick and dirty way to tell if you have a 32 or 64 bit version of xmogrt.dll (Juggernet's "native" layer) is the size. The 64 bit version comes in at 1600KB and the 32 bit version is about half that at 800KB or so. This varies from version to version, so on a 2013 system, it's 1200ish/900ish KB, but once you get the hang of it, you can tell them apart at sight, which is pretty useful. The other DLLs are also important, although as far as I can tell, only Tridion.ContentDelivery.AmbientData.dll is hard-compiled for 64 bit architecture, at least on the 2011 system I was working on. The rest of the .NET assemblies are compiled to MSIL, which of course, will run on either architecture.

But I digress. The thing I wanted to blog (and this will definitely be tagged note-to-self) was how to get IIS Express to run in 64 bit mode. By default it runs on 32 bits, but if you follow this link:

... you will find the following nugget of goodness:

You can configure Visual Studio 2012 to use IIS Express 64-bit by setting the following registry key:

reg add HKEY_CURRENT_USER\Software\Microsoft\VisualStudio\11.0\WebProjects /v Use64BitIISExpress /t REG_DWORD /d 1

However, this feature is not supported and has not been fully tested by Microsoft. Improved support for IIS Express 64-bit is under consideration for the next release of Visual Studio.

Very handy indeed. Running under IIS Express is just one click of the button. Just works.

And by way of a PS. (Post Script that is, not PowerShell) here's how you find the processor architecture of a DLL (This time on my 2013 image.)

PS C:\inetpub\www.visitorsweb.local\bin> [reflection.assemblyname]::GetAssemblyName((resolve-path '.\Tridion.ContentDelivery.AmbientData.dll')).ProcessorArchitecture
MSIL
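If you want the same answer for a whole bin directory at once, something like the following sketch should do. Get-AssemblyArchitecture is a name I've made up for the purpose, not part of any module. Native DLLs (xmogrt.dll, for example) aren't .NET assemblies, so the call throws for them, and the sketch simply reports that alongside the file size. I'd expect this to be most useful on Windows PowerShell; newer .NET runtimes treat ProcessorArchitecture as obsolete and may not report anything useful.

```powershell
# Hypothetical helper: reports size and processor architecture for every DLL
# in a directory.
function Get-AssemblyArchitecture {
    param(
        [Parameter(Mandatory = $true)]
        [string] $Directory
    )

    Get-ChildItem -Path $Directory -Filter *.dll | ForEach-Object {
        $file = $_
        try {
            # Reads the assembly manifest without loading the assembly.
            $arch = [System.Reflection.AssemblyName]::GetAssemblyName($file.FullName).ProcessorArchitecture
        }
        catch {
            # Native DLLs have no manifest, so GetAssemblyName throws.
            $arch = 'Not a .NET assembly'
        }

        [pscustomobject]@{
            Name         = $file.Name
            SizeKB       = [math]::Round($file.Length / 1KB)
            Architecture = $arch
        }
    }
}

# Example: inspect the directory that the core .NET library was loaded from.
$frameworkDir = Split-Path -Path ([object].Assembly.Location)
$report = @(Get-AssemblyArchitecture -Directory $frameworkDir)
$report | Select-Object -First 5 | Format-Table -AutoSize
```

Pointing it at the bin directory of the web application instead gives you the size check and the architecture check in one table.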

Well anyway - it's no fun scratching your head over stuff like this. Maybe this helps.

Understanding the eight Powershell profiles (or Nobody expects the Spanish Inquisition)

A while ago, I started using the Windows Powershell ISE, and immediately fell foul of the fact that the scripts I was debugging didn't work because their environment was set up in my $profile. It turns out that the ISE has a separate profile from the one used in the PowerShell proper. It's easy enough to deal with once you know about this, although my first attempt at working round the problem would have been better if I'd known how easy it is to get the names of the different profile locations. At that time, things would have gone better if I'd first read this article by Ed Wilson on the Scripting Guy blog: Understanding the Six Powershell Profiles.

In this article, he explains that there are four profiles which are loaded by the PowerShell on startup. You can get the names of them by looking at the $profile variable. The commonest use of this is to do something like:

notepad $profile

to edit your profile. Well as you already know by this point, there are more. You can see these with a little more typing, as follows:

$profile.CurrentUserAllHosts
$profile.CurrentUserCurrentHost
$profile.AllUsersCurrentHost
$profile.AllUsersAllHosts

What you get when you type $profile is the same as $profile.CurrentUserCurrentHost.
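To see all four at once, along with whether the file actually exists, a minimal sketch like this one works in whatever host you run it from. The only assumption is that the host has populated $PROFILE in the usual way.

```powershell
# The four profile properties that every host exposes on $PROFILE.
$profileNames = 'AllUsersAllHosts', 'AllUsersCurrentHost',
                'CurrentUserAllHosts', 'CurrentUserCurrentHost'

$profileReport = foreach ($name in $profileNames) {
    $path = $PROFILE.$name
    [pscustomobject]@{
        Profile = $name
        Path    = $path
        # A profile path is only a location; the file itself is optional.
        Exists  = [bool]($path -and (Test-Path -LiteralPath $path))
    }
}

$profileReport | Format-Table -AutoSize
```

Run it once in the console and once in the ISE and you'll see the CurrentHost paths change while the AllHosts ones stay put.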

So that's four. You can get to six quite easily by understanding that the ISE and the PowerShell are different hosts, so are entitled to specify their own locations for the CurrentHost versions. Of course, having got that far, we can immediately suppose that any application that hosts the PowerShell might also have its own CurrentHost profiles, so in theory it's limitless. Whatever... I don't have any beef with Ed for saying there are six - he was describing the general case, and doing a good job of helping people avoid a slightly non-obvious pitfall. (Like I said - I wish I'd read his article earlier than I did.)

So there you have it. We can say eight, ten, twelve... whatever.. so what's the point of this blog post? Well it turns out that I managed to uncover a different non-obvious pitfall. Drove me crazy for a while, I can tell you!

I quite often use Vim for text editing, and I thought it would be cool to get it wired up to work with PowerShell. I already had PowerShell syntax highlighting set up, but I wanted to be able to open up files and edit them from within the shell. There's plenty of material on the Internet about how to do this, and I set to with a will. Not too hard - set up a couple of aliases and so forth. But then I found that when I opened up files directly in the shell (instead of spawning a gVim window) the syntax highlighting combined with the background color of the shell was just awful. If I am in an elevated shell, I have this set to DarkRed, and the code to do this is in my profile. So I went to disable it. Looked in my profile. The colour changing code wasn't there. Then I remembered the other profiles - and that I'd put this code into one of the AllUsers profiles, or at least I thought I had - because it wasn't there either. Well it was definitely executing. If I started the powershell with -noprofile, I got a dark blue background, and without -noprofile, I got red. Hmm... Then I edited all four profiles so that each would emit its name when executed. Nothing doing! The AllUsers profiles weren't even executing.

Eventually I poked at it some more, and realised that if I used notepad to edit the AllUsers profiles, I could see my colour changing code, but from vi I saw my "This is the AllUsersCurrentHost profile" line. Bingo! You've probably guessed by now. It was a 64bit server, and the AllUsers profiles are down in C:\Windows\System32. A 32 bit process that asks for that directory is quietly redirected to C:\Windows\SysWOW64\, so there are really two sets of files, and which one you see depends on whether you are using a 32 bit or 64 bit editor.
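A quick way to check which side of the fence you're on is sketched below. It's Windows-only, and it assumes the default Windows PowerShell install location under the Windows directory. The Sysnative alias is what lets a 32 bit process reach the real System32.

```powershell
# Is this PowerShell process 32 bit or 64 bit?
$is64 = [Environment]::Is64BitProcess
$bitness = if ($is64) { '64-bit' } else { '32-bit' }
"This is a $bitness process; AllUsers profiles are read from $PSHOME"

# From a 32 bit process 'System32' is redirected to SysWOW64, so the real
# 64 bit directory has to be reached through the 'Sysnative' alias instead.
$nativeDir = if ($is64) { 'System32' } else { 'Sysnative' }

$allUsersProfiles = foreach ($dir in $nativeDir, 'SysWOW64') {
    if ($env:windir) {
        $path = Join-Path $env:windir "$dir\WindowsPowerShell\v1.0\profile.ps1"
        [pscustomobject]@{
            Path   = $path
            Exists = (Test-Path -LiteralPath $path)
        }
    }
}

$allUsersProfiles | Format-Table -AutoSize
```

If both rows say the file exists, you have two AllUsersAllHosts profiles, and your editor's bitness decides which one you end up changing.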

So according to Ed's counting system, the total is now eight!

Dumping publication properties to a spreadsheet - a Powershell one-liner

A colleague mentioned to me today that he'd solved a problem with Tridion publishing that had been caused by the publication path being incorrectly set on some publications. We talked about how useful it would be to be able to get a summary of the paths without having to open every publication. Time for a powershell one-liner! How about this?

$core.GetSystemWideList((new-object PublicationsFilterData)) | select-object -property Title,MultimediaPath,MultimediaURL,PublicationPath | Export-Csv -path c:\pubs.csv

Those of you who have been following along will notice that I have imported the core service namespace using the reflection module, but even on a bare Tridion system, you could type this easily enough. The CSV file can be opened up directly in Excel, and Bob's your uncle.
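One small wrinkle if you're on Windows PowerShell: Export-Csv writes a #TYPE line at the top of the file unless you pass -NoTypeInformation, and Excel will show that as the first row. Here's a minimal sketch using stand-in objects (the publication titles and paths are invented), so it runs without a core service client:

```powershell
# Stand-in publication data; the titles and paths are invented.
$pubs = @(
    [pscustomobject]@{ Title = '010 Schemas'; PublicationPath = '\' }
    [pscustomobject]@{ Title = '050 Website'; PublicationPath = '\website' }
)

# Write to the temp directory rather than the root of C:.
$csvPath = Join-Path ([System.IO.Path]::GetTempPath()) 'pubs-demo.csv'

$pubs | Select-Object -Property Title, PublicationPath |
    Export-Csv -Path $csvPath -NoTypeInformation

# The first line is now the column headers rather than a #TYPE line.
$firstLine = Get-Content -Path $csvPath -TotalCount 1
$firstLine
```

The same switch can be tacked onto the one-liner above without changing anything else.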